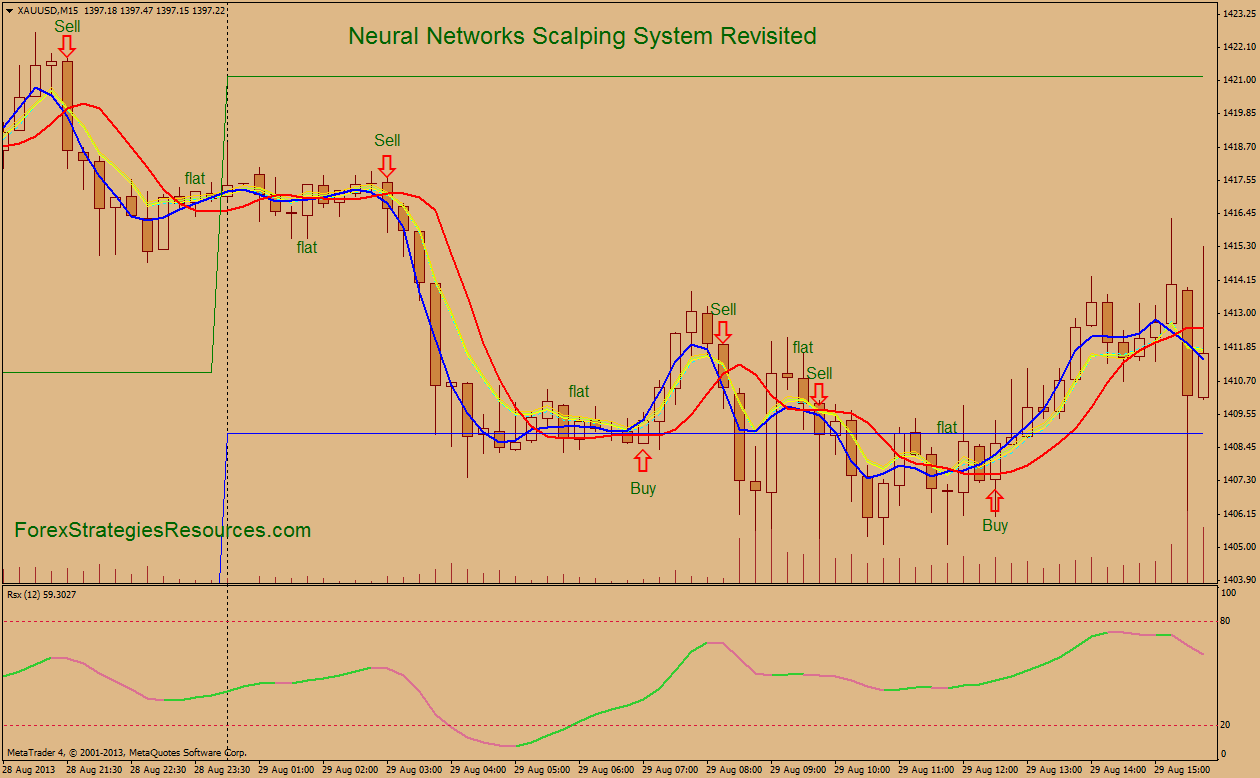

Neural net trading system

Deep Blue was the first computer to defeat a reigning world chess champion in a match.

That was in 1997, and it took about 20 years until another program, AlphaGo, could defeat the best human Go player. Deep Blue was a model-based system with hardwired chess rules. AlphaGo is a data-mining system, a deep neural network trained with thousands of Go games.

A breakthrough in software, not improved hardware, was essential for the step from beating top chess players to beating top Go players. When applied to trading, this data mining approach does not care about market mechanisms. It just scans price curves or other data sources for predictive patterns. In fact the most popular, and surprisingly profitable, data mining method works without any fancy neural networks or support vector machines.

Machine learning principles

A learning algorithm is fed with data samples, normally derived in some way from historical prices.

Each sample consists of n variables x1 .. xn, the predictors. Each sample also normally includes a target variable y, like the return of the next trade after taking the sample, or the next price movement.
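To make the sample structure concrete, here is a minimal Python sketch. Purely for illustration, it assumes that the predictors are the last n price changes and that the target is the next price change. Real systems may use indicators or other functions of the price curve instead. The function name `make_samples` is hypothetical.

```python
def make_samples(prices, n):
    """Build (predictors, target) samples from a list of prices.

    Hypothetical choice for illustration: the predictors x1 .. xn are the
    last n price changes, the target y is the next price change.
    """
    changes = [prices[i] - prices[i - 1] for i in range(1, len(prices))]
    samples = []
    for t in range(n, len(changes)):
        x = changes[t - n:t]  # predictors: the n most recent price changes
        y = changes[t]        # target: the price change that follows them
        samples.append((x, y))
    return samples


if __name__ == "__main__":
    for x, y in make_samples([100.0, 101.0, 100.5, 102.0, 101.0, 103.0], 3):
        print(x, "->", y)
```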

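During training, the algorithm learns to predict the target y from the predictors x1 .. xn and stores what it has learned in an algorithm-specific data structure, the model. Such a model can be a function with prediction rules in C code, generated by the training process. Or it can be a set of connection weights of a neural network. The predictors must carry information sufficient to predict the target y with some accuracy.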

The predictors also often have to fulfill two formal requirements. First, all predictor values should be in the same range, so you need to normalize them in some way before feeding them to the machine. Second, the samples should be balanced, i.e. equally distributed over all values of the target variable. So there should be about as many winning as losing samples.

Regression algorithms predict a numeric value, like the magnitude and sign of the next price move. Classification algorithms predict a qualitative class, for instance whether a sample is followed by a winning or a losing trade. Some algorithms, such as neural networks or support vector machines, can be run either in regression or in classification mode.
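Here is a minimal sketch of both formal requirements. It assumes a linear min-max scaling into the range -1 .. +1, although the exact target range is a free choice. It also assumes a target whose sign marks a win or a loss. The helper names are hypothetical.

```python
def normalize(values):
    """Scale predictor values linearly into the range -1 .. +1.

    The target range -1 .. +1 is an assumption for this sketch.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # constant predictor: carries no information
    return [2.0 * (v - lo) / (hi - lo) - 1.0 for v in values]


def winning_fraction(targets):
    """Fraction of samples with a positive target; about 0.5 means balanced."""
    wins = sum(1 for y in targets if y > 0)
    return wins / len(targets)


if __name__ == "__main__":
    print(normalize([0.0, 5.0, 10.0]))
    print(winning_fraction([1.2, -0.4, 0.3, -2.0]))
```

In a real system, take the scaling limits from the training data only and reuse them for new data. Otherwise information from the future leaks into the samples.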

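A few algorithms learn to divide samples into classes without needing any target y. That is unsupervised learning, as opposed to supervised learning with a target.

Price curves contain a lot of noise and randomness and only weak predictive information. Therefore financial prediction is one of the hardest tasks in machine learning.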

More complex algorithms do not necessarily achieve better results. The selection of the predictors is critical to the success.

It is not a good idea to use lots of predictors, since this often causes overfitting and failure in out-of-sample operation.

Therefore data mining strategies often apply a preselection algorithm that determines a small number of predictors out of a pool of many. The preselection can be based on correlation between predictors, on significance, on information content, or simply on prediction success with a test set.
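Here is one possible preselection, sketched in Python under simple assumptions. Predictors are ranked by the absolute Pearson correlation of their values with the target. A candidate is dropped when it is strongly correlated with a predictor that was already chosen. The threshold `max_mutual=0.95` and the function names are illustrative and not taken from any particular tool.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equally long lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / math.sqrt(va * vb)


def preselect(predictors, target, k, max_mutual=0.95):
    """Pick up to k predictor names from a dict {name: values}.

    Candidates are ranked by their absolute correlation with the target;
    a candidate is skipped when it correlates more than max_mutual with a
    predictor that was already picked, since it would add little new information.
    """
    ranked = sorted(predictors,
                    key=lambda name: abs(pearson(predictors[name], target)),
                    reverse=True)
    chosen = []
    for name in ranked:
        if len(chosen) == k:
            break
        if all(abs(pearson(predictors[name], predictors[c])) <= max_mutual
               for c in chosen):
            chosen.append(name)
    return chosen
```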

Practical experiments with feature selection can be found in a recent article on the Robot Wealth blog.

Many trading systems that clients order are not based on any financial model. The client often just wanted trade signals from certain technical indicators, filtered with other technical indicators, in combination with still more technical indicators.

When asked how this hodgepodge of indicators could be a profitable strategy, the client normally pointed to his own trading experience. Indeed, most of those systems did not pass a walk-forward analysis (WFA) test, and some not even a simple backtest, but a surprisingly large number did. And those were also often profitable in real trading. The client had systematically experimented with technical indicators until he found a combination that worked in live trading with certain assets. This trial-and-error way of technical analysis is a classical data mining approach, just executed by a human and not by a machine.

I cannot really recommend this method, since a lot of luck, not to speak of money, is probably involved. But I can testify that it sometimes leads to profitable systems.

Price action, i.e. patterns of candles, can be data mined in a similar way. There are software packages for that purpose. They search for patterns that are profitable by some user-defined criterion, and use them to build a specific pattern detection function. Such a C function returns 1 when the signals match one of the patterns, otherwise 0. The generated code is lengthy, and this is not the fastest way to detect patterns.

A better method, used by Zorro when the detection function need not be exported, is to sort the signals by their magnitude and check the sort order.
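The following sketch illustrates the sort-order idea. It is not Zorro's actual implementation, which the text does not show. A pattern is represented as the order of the signal indices after sorting by value. The learned profitable patterns are assumed to be given as a set of such orders, so detection becomes a single set lookup instead of a long chain of comparisons.

```python
def sort_order(signals):
    """Indices of the signals in ascending order of their values."""
    return tuple(sorted(range(len(signals)), key=lambda i: signals[i]))


def detect(signals, patterns):
    """Return 1 if the signals' sort order is one of the learned patterns, else 0."""
    return 1 if sort_order(signals) in patterns else 0


if __name__ == "__main__":
    # Hypothetical learned patterns for three signals.
    patterns = {(1, 2, 0), (0, 2, 1)}
    print(detect([3.0, 1.0, 2.0], patterns))
    print(detect([1.0, 2.0, 3.0], patterns))
```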

Can price action trading really work? One can at best imagine that sequences of price movements cause market participants to react in a certain way, this way establishing a temporary predictive pattern. However the number of patterns is quite limited when you only look at sequences of a few adjacent candles. The next step is comparing candles that are not adjacent, but arbitrarily selected within a longer time period. It is hard to imagine how a price move can be predicted by some candle patterns from weeks ago.

Still, a lot of effort is going into that. A fellow blogger, Daniel Fernandez, runs a subscription website, Asirikuy, specialized in data mining candle patterns. He has refined pattern trading down to the smallest details, and if anyone ever achieves a profit this way, it will be him.

If profitable price action systems really exist, apparently no one has found them yet.

Linear regression

Linear regression is the simple basis of many complex machine learning algorithms. The target is assumed to be a linear function of the predictors:

$$y = a_0 + a_1 x_1 + a_2 x_2 + \dots + a_n x_n$$

The coefficients a0 .. an are calculated to minimize the sum of squared differences between the true y values of the m training samples and the y values predicted by the above formula:

$$\sum_{j=1}^{m} \left( y_j - \hat{y}_j \right)^2 \rightarrow \min$$

The minimization can be done directly with some matrix arithmetic, so no iterations are required. Simple linear regression is available in most trading platforms. Multivariate linear regression is available in R through the lm() function.
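For a single predictor, the matrix arithmetic reduces to two closed-form expressions. Here is a minimal sketch (function names hypothetical) that also computes the squared error being minimized.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = a0 + a1*x, solved in closed form (no iterations)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    a1 = sxy / sxx        # slope
    a0 = my - a1 * mx     # intercept
    return a0, a1


def sum_squared_error(xs, ys, a0, a1):
    """The quantity minimized by the fit: sum of (y - predicted y)^2."""
    return sum((y - (a0 + a1 * x)) ** 2 for x, y in zip(xs, ys))
```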

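A variant is polynomial regression, which uses the sole predictor x raised to powers up to n:

$$y = a_0 + a_1 x + a_2 x^2 + \dots + a_n x^n$$

The polyfit function of MATLAB, R, Zorro, and many other platforms can be used for polynomial regression.

Perceptron

The perceptron is often referred to as a neural network with only one neuron.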

In fact a perceptron is a regression function like the one above, but with a binary result produced by a threshold. It is closely related to logistic regression, which replaces the hard threshold with a smooth sigmoid function.

Neural networks

Linear or logistic regression can only solve linear problems. Many problems do not fall into this category. A famous example is predicting the output of a simple XOR function, and most likely also predicting prices or trade returns. An artificial neural network (ANN) can tackle nonlinear problems.
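To illustrate why a hidden layer helps, here is a tiny network of threshold perceptrons that computes XOR, which no single perceptron can do. Its weights are hand-picked, not trained. One hidden neuron detects "x1 OR x2", the other "x1 AND x2", and the output neuron fires for OR but not AND. All names and weights are illustrative.

```python
def step(z):
    """Perceptron threshold: 1 if the weighted sum is positive, else 0."""
    return 1 if z > 0 else 0


def perceptron(inputs, weights, bias):
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_net(x1, x2):
    h_or = perceptron((x1, x2), (1, 1), -0.5)        # hidden neuron: x1 OR x2
    h_and = perceptron((x1, x2), (1, 1), -1.5)       # hidden neuron: x1 AND x2
    return perceptron((h_or, h_and), (1, -1), -0.5)  # output: OR but not AND


if __name__ == "__main__":
    for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(a, b, xor_net(a, b))
```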

Each perceptron is a neuron of the net. Its output goes to the inputs of all neurons of the next layer. Like the perceptron, a neural network also learns by determining the coefficients that minimize the error between sample prediction and sample target.
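For the simplest case, a single neuron, learning by error minimization can be sketched as gradient descent. This sketch assumes a sigmoid output, as in logistic regression, and a cross-entropy error. For that error, the gradient with respect to the weighted input is simply prediction minus target. The learning rate and epoch count are arbitrary choices.

```python
import math


def sigmoid(z):
    """Smooth, differentiable replacement for the perceptron threshold."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # avoids overflow for large negative z
    return e / (1.0 + e)


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_neuron(samples, epochs=2000, rate=0.5):
    """Fit one sigmoid neuron by gradient descent on the cross-entropy error.

    samples: list of (inputs, target) with target 0 or 1.
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in samples:
            err = predict(weights, bias, x) - y  # d(error)/d(weighted sum)
            weights = [w - rate * err * xi for w, xi in zip(weights, x)]
            bias -= rate * err
    return weights, bias
```

Trained on the AND samples, this neuron learns the linearly separable rule. Trained on XOR samples, a single neuron like this cannot succeed, and that is the gap hidden layers and backpropagation fill.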

Training a network with several layers, however, requires an approximation process. This is normally done by backpropagating the error from the output to the inputs and optimizing the weights on its way. This process imposes two restrictions. First, the neuron outputs must now be continuously differentiable functions instead of the simple perceptron threshold. Second, the network must not be too deep, i.e. it must not have too many hidden layers between inputs and output, since the backpropagated error signal weakens from layer to layer. With continuous outputs, the network can also be used for regression, i.e. for predicting a numeric value instead of a binary outcome.

Deep learning

Deep learning methods use neural networks with many hidden layers and thousands of neurons, which could no longer be trained effectively by conventional backpropagation.

Several methods have become popular in recent years for training such huge networks. They usually pre-train the hidden neuron layers to achieve a more effective learning process.

A Restricted Boltzmann Machine (RBM) is an unsupervised classification algorithm with a special network structure that has no connections between the hidden neurons.

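Those methods allow very complex networks for tackling very complex learning tasks. Deep learning networks are available in the deepnet and darch R packages. Deepnet provides an autoencoder, Darch a restricted Boltzmann machine.

Support vector machines

Like a neural network, a support vector machine (SVM) is another extension of linear regression. When we look at the regression formula again, we can interpret the predictors x1 .. xn as coordinates of an n-dimensional feature space. Setting the target y to a fixed value, such as 0, then defines a flat hyperplane in that space that separates the samples with y > 0 from those with y < 0. The SVM calculates the coefficients so that the distance of the plane to the nearest samples is maximal. These nearest samples are the "support vectors" that give the algorithm its name. This way we have a binary classifier with optimal separation of winning and losing samples.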

In financial prediction, however, usually no flat plane can be squeezed between winners and losers. If it could, we would have simpler methods to calculate that plane. So for the common case we need the SVM trick: adding more dimensions to the feature space.

For this, the SVM algorithm produces more features with a kernel function, for instance by combining any two existing predictors into a new feature.

This is analogous to the step above from simple regression to polynomial regression, where more features are also added by raising the sole predictor to powers up to n. The more dimensions you add, the easier it is to separate the samples with a flat hyperplane. This plane is then transformed back to the original n-dimensional space, getting wrinkled and crumpled on the way.

By cleverly selecting the kernel function, the process can be performed without actually computing the transformation. Like neural networks, SVMs can be used not only for classification, but also for regression.
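The kernel trick can be checked numerically with a minimal sketch. It assumes two predictors and a degree-2 polynomial kernel. The kernel value then equals the dot product of the explicitly transformed 3-D samples, whose new features include the product of the two predictors. The kernel itself never computes that transformation. The function names are hypothetical.

```python
import math


def phi(x):
    """Explicit transformation of a 2-D sample into a 3-D feature space.

    The new features are the squares and the product of the two predictors.
    """
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def poly_kernel(x, z):
    """Degree-2 polynomial kernel: equals dot(phi(x), phi(z)),
    but is computed without ever building phi."""
    return dot(x, z) ** 2
```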

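SVMs also offer some parameters for optimizing, and possibly overfitting, the prediction process. You normally use an RBF kernel (radial basis function, a symmetric kernel), but you also have the choice of other kernels, such as sigmoid, polynomial, and linear. In the next and final part of this series I plan to describe a trading strategy using an SVM.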

K-nearest neighbor

Compared with neural networks and SVMs, the k-nearest neighbor (kNN) algorithm is simple, and it has a unique property: it needs no training. The samples are the model. You could use this algorithm for a trading system that learns permanently by simply adding more and more samples. The algorithm computes the distances in feature space from the current feature values to the samples and finds the k nearest ones. It then predicts the target from the average of the k target variables of those samples, weighted by their inverse distances. It can be used for classification as well as for regression.
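Here is a minimal Python sketch of inverse-distance-weighted kNN regression. It assumes Euclidean distance and samples stored as (features, target) pairs. A plain linear scan is used instead of a fast search tree. The name `knn_predict` is hypothetical.

```python
import math


def knn_predict(samples, x, k):
    """Predict the target at feature vector x from the k nearest samples.

    samples: list of (features, target); targets are averaged with
    weights 1/distance.
    """
    nearest = sorted(samples, key=lambda s: math.dist(s[0], x))[:k]
    num = den = 0.0
    for features, target in nearest:
        d = math.dist(features, x)
        if d == 0.0:
            return target  # exact match: its inverse-distance weight is infinite
        num += target / d
        den += 1.0 / d
    return num / den
```

Learning permanently just means appending new (features, target) pairs to the sample list.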

Software tricks borrowed from computer graphics, such as an adaptive binary tree (ABT), can make the nearest neighbor search pretty fast. In my past life as a computer game programmer, we used such methods in games for tasks like self-learning enemy intelligence.

You can call the knn function in R for nearest neighbor prediction, or write a simple function in C for that purpose.

K-Means

K-means is an approximation algorithm for unsupervised classification.

It has some similarity to k-nearest neighbor, and not only in its name. For classifying the samples, the algorithm first places k random points in the feature space.

Then it assigns to each of those points all samples that are closer to it than to any other point. Each point is then moved to the mean of its assigned samples.
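The assign-and-move loop, repeated until the assignment no longer changes, can be sketched as follows. For reproducible results, the sketch takes the k starting points as an argument instead of placing them randomly. Random placement could use `random.sample(samples, k)`, for instance. The function name is hypothetical.

```python
import math


def kmeans(samples, initial_points, max_iter=100):
    """Plain k-means clustering.

    samples: list of coordinate tuples; initial_points: the k starting points.
    Returns (points, labels), where labels[i] is the class of samples[i].
    """
    points = [tuple(p) for p in initial_points]
    labels = None
    for _ in range(max_iter):
        # Assign every sample to its nearest point.
        new_labels = [min(range(len(points)), key=lambda j: math.dist(s, points[j]))
                      for s in samples]
        if new_labels == labels:
            break  # assignment did not change: done
        labels = new_labels
        # Move every point to the mean of the samples assigned to it.
        for j in range(len(points)):
            members = [s for s, lab in zip(samples, labels) if lab == j]
            if members:
                points[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return points, labels
```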

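Moving the points generates a new sample assignment, since some samples are now closer to another point. The process is repeated until the assignment no longer changes. We now have k classes of samples, each in the neighborhood of one of the k points. This simple algorithm can produce surprisingly good results. In R, the kmeans function does the trick. An example of the k-means algorithm for classifying candle patterns can be found here: Unsupervised candlestick classification for fun and profit.

Naive Bayes

The Naive Bayes algorithm classifies samples by events, based on Bayes' theorem. P(Y|X) is the probability that event Y (for instance, a winning trade) occurs, given that event X has occurred.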

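If we are naive and assume that all events X are independent of each other, we can calculate the overall probability that a sample is winning by simply multiplying the prior probability of winning with the probabilities P(X|winning) for every event X. This way we end up with this formula:

$$P(\text{winning} \mid X_1, \dots, X_n) = s \cdot P(\text{winning}) \cdot \prod_{i=1}^{n} P(X_i \mid \text{winning})$$

Here s is a scaling factor that normalizes the probabilities of winning and losing to a sum of 1. For the formula to work, the features should be selected so that they are as independent as possible, which is an obstacle for using Naive Bayes in trading.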
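Here is a minimal sketch of this formula for binary events, with names chosen for illustration. It assumes that an absent event contributes 1 - P(X|class). It also adds Laplace smoothing, so that an event never observed in one class does not zero out the product. The scaling factor s is applied implicitly by dividing by the sum over both classes.

```python
def train_naive_bayes(samples):
    """Estimate P(class) and P(X_i | class) from binary event flags.

    samples: list of (events, won), events being a tuple of 0/1 flags.
    Laplace smoothing (+1 / +2) is an added assumption that keeps an
    event never seen in one class from zeroing the whole product.
    """
    n_events = len(samples[0][0])
    model = {}
    for cls in (True, False):
        rows = [events for events, won in samples if won == cls]
        prior = len(rows) / len(samples)
        p_event = [(sum(r[i] for r in rows) + 1) / (len(rows) + 2)
                   for i in range(n_events)]
        model[cls] = (prior, p_event)
    return model


def p_winning(model, events):
    """P(winning | events) = s * P(winning) * product of P(X_i | winning)."""
    score = {}
    for cls, (prior, p_event) in model.items():
        p = prior
        for flag, pe in zip(events, p_event):
            p *= pe if flag else 1.0 - pe  # event present or absent
        score[cls] = p
    return score[True] / (score[True] + score[False])  # s normalizes to 1
```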

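Numerical predictors can be converted to events by dividing the number into separate ranges.

Decision trees

Decision trees predict an outcome or a numeric value based on a series of yes/no decisions, arranged like the branches of a tree. Any decision is either the presence or absence of an event, in case of non-numerical features, or a comparison of a feature value with a fixed threshold. How is such a tree produced from a set of samples?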

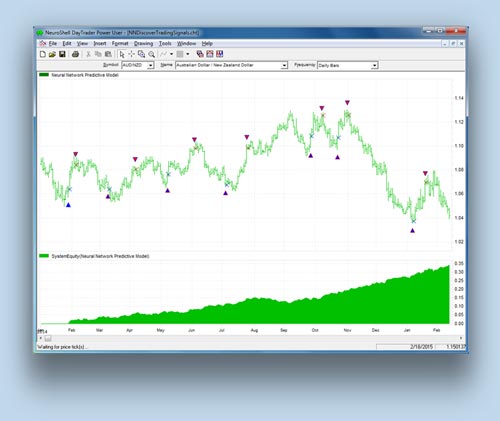

There are several methods. Zorro uses the Shannon information entropy, which already appeared on this blog in the Scalping article. The dividing threshold t is selected so that the information gain is maximal. The information gain is the difference between the information entropy of the whole space and the weighted sum of the information entropies of the two divided sub-spaces. It is largest when the samples in each sub-space are more similar to each other than the samples in the whole space.
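The split criterion can be sketched for a single numeric feature and class labels. This is a generic entropy-based split search, meant to be close in spirit to the text rather than a copy of Zorro's actual code. Each midpoint between neighboring feature values is tried as threshold t, and the one with the largest information gain wins. The function names are hypothetical.

```python
import math


def entropy(labels):
    """Shannon information entropy (in bits) of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def best_threshold(xs, ys):
    """Threshold t on one feature that maximizes the information gain."""
    base = entropy(ys)
    best_t, best_gain = None, -1.0
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        # Entropies of the sub-spaces, weighted by their share of the samples.
        gain = base - (len(left) * entropy(left) + len(right) * entropy(right)) / len(ys)
        if gain > best_gain:
            best_t, best_gain = t, gain
    return best_t, best_gain
```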

Each split is equivalent to a comparison of a feature with a threshold.

By repeated splitting, we soon get a huge tree with thousands of threshold comparisons. Then the process is run backwards by pruning the tree and removing all decisions that do not lead to substantial information gain. Finally we end up with a relatively small tree. Decision trees have a wide range of applications. They can produce excellent predictions superior to those of neural networks or support vector machines.

But they are not a one-size-fits-all solution, since their splitting planes are always parallel to the axes of the feature space. This somewhat limits their predictions. They can be used not only for classification, but also for regression, for instance by returning the percentage of samples contributing to a certain branch of the tree.

The best known classification tree algorithm is C5. For improving the prediction even further or overcoming the parallel-axis-limitation, an ensemble of trees can be used, called a random forest. The prediction is then generated by averaging or voting the predictions from the single trees. Random forests are available in R packages randomForest , ranger and Rborist.
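A real random forest is too large to show briefly, but its core fits into a short sketch: training trees on bootstrap samples and letting them vote. The sketch assumes a single numeric feature and reduces each tree to one split (a decision stump). It also omits the random feature subsets that real random forests add at each split. All names are hypothetical.

```python
import random
from collections import Counter


def majority(labels, default):
    """Most frequent label, or default for an empty list."""
    return Counter(labels).most_common(1)[0][0] if labels else default


def fit_stump(xs, ys):
    """One-split decision tree on a single feature: (threshold, left, right)."""
    overall = majority(ys, 0)
    best, best_err = (float("-inf"), overall, overall), sum(y != overall for y in ys)
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        l_lab, r_lab = majority(left, overall), majority(right, overall)
        err = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
        if err < best_err:
            best, best_err = (t, l_lab, r_lab), err
    return best


def predict_stump(stump, x):
    t, l_lab, r_lab = stump
    return l_lab if x < t else r_lab


def fit_forest(xs, ys, n_trees=25, seed=1):
    """Train each tree on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(xs)) for _ in xs]
        forest.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return forest


def predict_forest(forest, x):
    """Majority vote of all trees."""
    return majority([predict_stump(s, x) for s in forest], 0)
```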

Conclusion

There are many different data mining and machine learning methods at your disposal. There is no doubt that machine learning has a lot of advantages. You need not care about market mechanisms; you can concentrate on pure mathematics.

Machine learning is a much more elegant and attractive way to generate trade systems. It has all advantages on its side but one: it tends to fail in live trading. Every second week a new paper about trading with machine learning methods is published (a few can be found below).

Please take all those publications with a grain of salt. If those systems worked as described, the scientists involved should be billionaires by now. So maybe a lot of selection bias went into the results. And from what one hears about the algorithmic methods of successful hedge funds, machine learning still seems to be rarely used.

But maybe this will change in the future with the availability of more processing power and the emergence of new algorithms for deep learning.

The next part of this series will deal with the practical development of a machine learning strategy.

Comments

There is a lot of potential in this approach towards the market. Btw, are you using the code editor that comes with Zorro?

The colorful script is produced by WordPress.

Is it then possible that Notepad detects the Zorro variables in the scripts? I mean, that BarPeriod is highlighted as it is in the Zorro editor?

I concur with the final paragraph. But reproducing their results remains elusive.

ML fails in live trading? Maybe the ML has to be trained with price data that also include historical spread, roll, tick data and so on?

I think the number one reason for live failure is data mining bias, caused by biased selection of inputs and parameters to the algorithm.

Thanks to the author for the great series of articles. This happens when the price finally does go in the right direction, but before that it may make some steps against us. So the criterion is which value is higher:

If you just want to play around with some machine learning, I implemented a very simple ML tool in Python and added a GUI.

Thanks JCL, I found your article very interesting. I would like to ask you, from your expertise in trading, where can we download reliable historical Forex data? I consider this very important because the Forex market is decentralized.

There is no really reliable Forex data, since every Forex broker creates its own data. They all differ slightly depending on which liquidity providers they use.

FXCM has relatively good M1 and tick data with few gaps. You can download it with Zorro.

The actual trading rules are then derived from the interactions between these time series. So in essence I am not just blindly throwing recent market data into an ML model in an effort to predict price action direction, but instead develop a framework based upon sound investment principles in order to point the models in the right direction.

Thanks for posting this great mini series JCL. I recently studied a few latest papers about ML trading, deep learning especially.

Yet I found that most of them evaluated the results without any risk-adjusted index. Also, they seldom mentioned the trading frequency in their experiment results, making it hard to evaluate the potential profitability of those methods. Do you have any good suggestions to deal with those issues?

ML papers normally aim for high accuracy. Equity curve variance is of no interest.

This is sort of justified because the ML prediction quality determines accuracy, not variance. Of course, if you really want to trade such a system, variance and drawdown are important factors.

So I could directly use them.

I was referring to the Indicator Soup strategies. But I can tell that coming up with a profitable Indicator Soup requires a lot of work and time.

Well, I am just starting a project that uses simple EMAs to predict price. It just selects the correct EMAs based on past performance and algorithm selection, which gives it some rustic degree of intelligence.

Thanks for the good writeup. By the way, how could we be in contact?

There are the following issues with ML, and with trading systems in general, that are based on historical data analysis: Future price movement is independent and not related to the price history.

There is absolutely no reliable pattern which can be used to systematically extract profits from the market. Applying ML methods in this domain is simply pointless and doomed to failure and is not going to work if you search for a profitable system.

Of course you can curve-fit any past period and come up with a profitable system for it. The only thing that determines price movement is demand and supply, and these are often the result of external factors that cannot be predicted. These sorts of events cause significant shifts in the demand and supply structure of the FX market. As a consequence, prices begin to move, but nobody really cares about price history, only about the execution of the incoming orders.

An automated trading system can only be profitable if it monitors a significant portion of the market and takes the supply and demand into account for making a trading decision.

But this is not the case with any of the systems being discussed here. I will still be keeping an eye on your posts, as I like your approach and the scientific rigor you apply.

Your blog is the best of its kind, keep up the good work! Instead you have to know when and how the institutions will execute market-moving orders, and front-run them.

Thanks for the extensive comment. I often hear these arguments, and they do sound intuitive; the only problem is that they are easily proven wrong. The scientific way is experiment, not intuition. Simple tests show that past and future prices are often correlated. Otherwise, every second experiment on this blog would have had a very different outcome.

The second one always has the edge, because they sell at the ask and buy at the bid, pocketing the spread as an additional profit on top of any strategy they might be running. I am not so sure that they have not transitioned over time to the sell side in order to stay profitable. There is absolutely no information available about the nature of their business besides the vague statement that they are using solely quantitative algorithmic trading models…

Regarding the use of some of these algorithms, a commonly cited complaint is that financial data is non-stationary… Do you find this to be a problem?

Yes, this is a problem for sure. Returns are not any more stationary than other financial data.

Hello sir, I developed a set of rules for my trading which identifies supply and demand zones, then volume and all other criteria. Can you help me turn it into an automated system? If I do that myself, it could take too much time.

Please contact me at svadukia gmail.

Sure, please contact my employer at info opgroup.

Papers

Classification using deep neural networks

The Financial Hacker: A new view on algorithmic trading

Thanks for writing such a great article series JCL… a thoroughly enjoyable read! Anyway, best of luck with your trading and keep up the great articles!

The only sure winners in this scenario will be the technology and tool vendors.

Thanks for the informative post!
